Query your knowledge base with Generative AI

Use PlayFetch to streamline the connection between your LLMs and vector stores

If you want to give PlayFetch a try, please sign up for early access here. If you’d like to get in touch with us directly, please send an email to hello at playfetch dot ai.

While LLMs are extremely powerful tools with a wide range of applications, they also have inherent limitations that are hard to overcome when using them in isolation. Even the latest generation models are prone to hallucination and have limited context windows. Augmenting those models with new or proprietary data can be expensive and time-consuming, or even completely infeasible, depending on the use case.

Fortunately, these issues can often be mitigated by feeding relatively small chunks of data relevant to the query into the LLM as part of the prompt. This data can then be used to guide, constrain, or augment the responses you get from the generic model.

Of course, retrieving the most relevant data snippets in your knowledge base for an arbitrary natural language query is not a trivial task to begin with. This is where embeddings and vector stores come in. Embedding is the process of mapping chunks of text to numeric vectors, and vector stores allow you to efficiently retrieve text snippets whose vectors are “close together” (for some definition of that term, such as cosine similarity). So instead of using a formal language like SQL to query a relational database, you can use a vector store to retrieve chunks of data that are “similar” or “relevant” to a given natural language query.
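To make “close together” a bit more concrete, here is a minimal sketch of similarity search using cosine similarity over a tiny in-memory list. The three-dimensional vectors and the sample texts are made up for illustration (a real embedding model produces vectors with many more dimensions), and a real vector store uses an index rather than a full sort.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vector, store, k=2):
    """Return the texts of the k entries most similar to query_vector.

    `store` is a list of (text, vector) pairs standing in for a vector store.
    """
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

# Toy data: hand-written vectors, not real embeddings.
store = [
    ("Transformers use attention layers.", [0.9, 0.1, 0.0]),
    ("Bread needs flour and yeast.", [0.0, 0.2, 0.9]),
    ("Attention weighs tokens against each other.", [0.8, 0.3, 0.1]),
]
query_vector = [1.0, 0.2, 0.0]  # pretend this is the embedded question

results = top_k(query_vector, store, k=2)
print(results)
```

The two attention-related snippets rank above the bread one because their vectors point in nearly the same direction as the query vector.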

Pinecone is one of the more popular vector store options out there today, and we will use their Generative QA with OpenAI example to illustrate how to set up a process as described above in PlayFetch. If you want to follow along, you can create a free Pinecone account and populate a sample vector store with a dataset of YouTube transcripts by running the steps in the Building a Knowledge Base section of their Google Colab project.

To enable the Pinecone integration in PlayFetch, you need to enter your Pinecone API key and environment in your user settings. You will also need to provide an API key for OpenAI, as we will use their popular text-embedding-ada-002 model to map our questions to vectors:

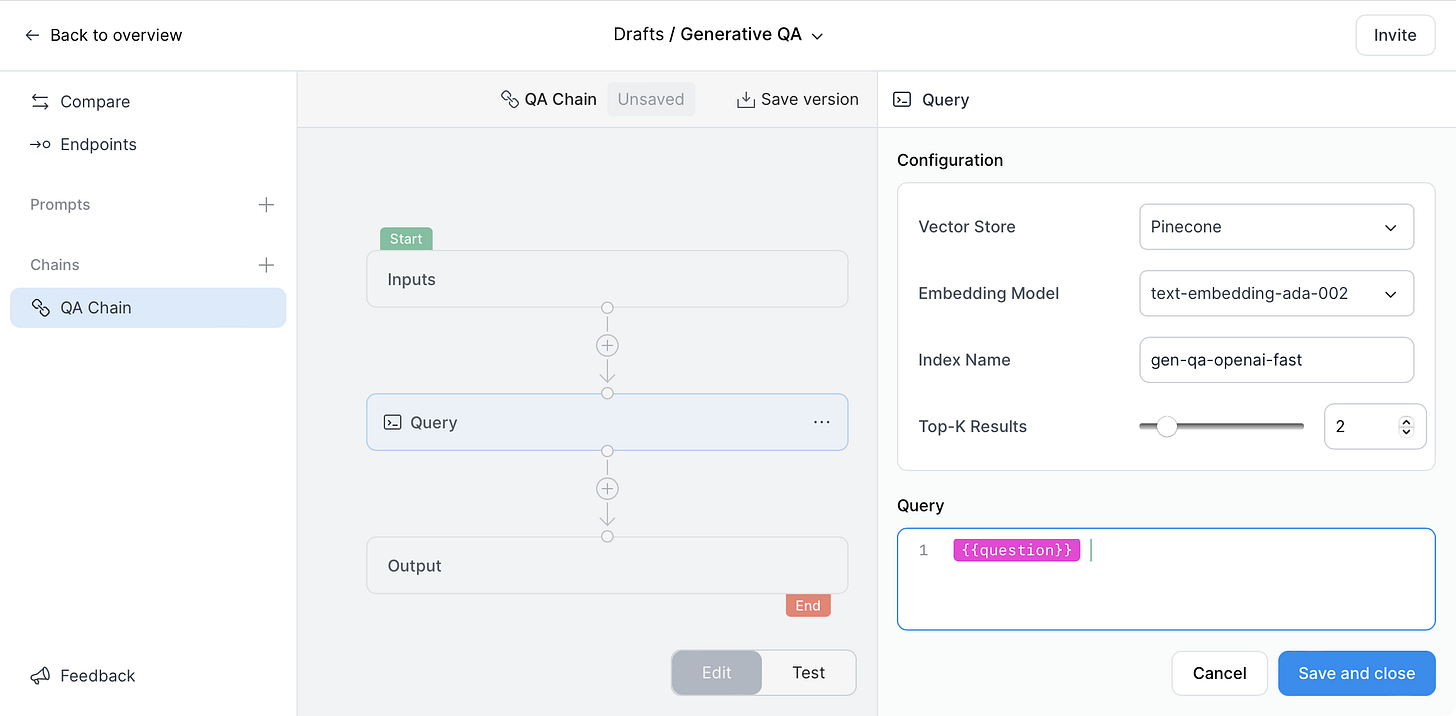

We are now ready to build a question-answering chain with two steps: one vector store query to retrieve relevant chunks of data for a given input question, and one LLM prompt where we inject those relevant data snippets to ensure an accurate response. As seen below, we’ve created a new project called Generative QA and added a new chain called QA Chain, where we can add our query step:
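Conceptually, the two-step chain is just function composition: the output of the query step becomes an input of the prompt step. The sketch below illustrates that shape only. The names `run_chain`, `fake_query_step`, and `fake_prompt_step` are hypothetical, and the two stand-in steps return canned data instead of calling a vector store or an LLM; this is not how PlayFetch is implemented internally.

```python
from typing import Callable, List

def run_chain(
    question: str,
    query_step: Callable[[str], List[str]],
    prompt_step: Callable[[str, List[str]], str],
) -> str:
    """Run the two steps in order and return the final answer."""
    chunks = query_step(question)          # step 1: retrieve relevant chunks
    return prompt_step(question, chunks)   # step 2: answer using those chunks

# Stand-ins for the real steps (hypothetical, for illustration only).
def fake_query_step(question: str) -> List[str]:
    return ["chunk A about the topic", "chunk B about the topic"]

def fake_prompt_step(question: str, chunks: List[str]) -> str:
    return f"Answered {question!r} using {len(chunks)} chunks"

answer = run_chain("What is attention?", fake_query_step, fake_prompt_step)
print(answer)
```

Keeping the steps as plain callables means either one can be swapped out (a different vector store, a different model) without touching the other.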

The configuration of our query step is pretty straightforward: we use Pinecone as our vector store and OpenAI’s default embedding model. We’ve entered the name of the Pinecone index that we created while populating the vector store earlier, and we want to retrieve the top two most relevant chunks of data for our query. The query itself is simply the user question which will be an input variable for our chain:

Next we add a basic GPT-3.5 prompt step to our chain with question and context as input variables. We’ve also mapped the output of the previous query step to the context input of the prompt:
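As a rough illustration of what mapping the query output to the context input amounts to, the sketch below joins the retrieved chunks into one string and substitutes it, together with the question, into a prompt template. The `{{variable}}` placeholder syntax, the template wording, the chunk separator, and the `render` helper are all assumptions made for this example rather than a description of PlayFetch's actual prompt format.

```python
import re

# Hypothetical prompt template with two input variables.
TEMPLATE = (
    "Answer the question based on the context below.\n\n"
    "Context:\n{{context}}\n\n"
    "Question: {{question}}\n"
    "Answer:"
)

def render(template, variables):
    """Replace each {{name}} placeholder with variables[name]."""
    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing input variable: {name}")
        return variables[name]
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)

# Output of the query step (made-up chunks), mapped to the context input.
chunks = [
    "Attention lets a model weigh tokens against each other.",
    "Transformers stack several attention layers.",
]
prompt = render(
    TEMPLATE,
    {"question": "What is attention?", "context": "\n\n---\n\n".join(chunks)},
)
print(prompt)
```

Raising on a missing variable keeps a half-filled prompt from silently reaching the model.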

And now we are ready to run our chain. If we switch to Test mode, we will need to provide at least one input question to run the chain with (but we could add additional questions and even test them all in parallel):

This is what the final result looks like after running the chain with our given input question (you can also inspect the results of each step separately, for example to see the chunks of relevant data returned by the query step):

The key takeaway is that the relevant context injected into the prompt helped ensure the accuracy of the answer without blowing up our prompt context window. We can also neatly encapsulate this logic in a simple RESTful endpoint for our chain. And you can extend your knowledge base by updating the vector store with more data, without retraining models or changing your LLM prompts or PlayFetch integrations.
